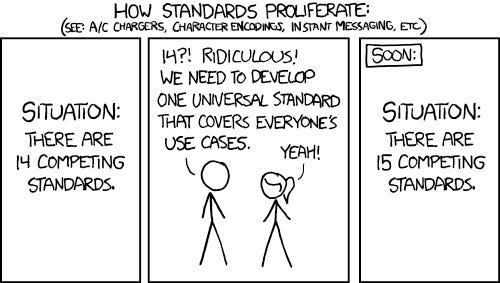

Why the AI Model Context Protocol Is Like a Fire Hydrant

And why demos aren't engineering

This article is based on a conversation with Owen Goode, who owns and runs JB Analytics, a marketing technology consulting firm in Texas that pairs deep technical expertise with lived business experience.

Owen spends his time helping organizations—many of them marketing-led—navigate the gap between what modern technology can do and what it should do.

He doesn’t approach AI as a novelty or a product feature, but as infrastructure that has to earn trust.

AI is a high-horsepower engine without the rest of the car to support it.

AI has reached the stage where almost anything is possible in isolation.

You can generate copy.

Summarize reports. (kinda)

Draft emails.

Spin up a demo landing page over coffee.

Yet, for most real business teams, very little of this feels solid.

The tools are impressive, but brittle.

The demos shine, but the moment you try to wire AI into the systems your business data actually flows through (your data warehouse, your CRM, your workflow tools), things slow down.

Permissions get fuzzy. Auditability decreases. Trust evaporates.

That’s not a model problem.

It’s a systems problem.

And it’s why a quiet, unglamorous connector pattern called the Model Context Protocol (MCP) matters more than most people realize.

A Detour Through Fire Hydrants

Fire hydrants in the United States are not engineering marvels on their own.

The hose connection has been the same for over 100 years.

They are not optimized for maximum flow for each municipality. They are not custom-fit to each climate or water composition.

From a narrow, local perspective, you—the reader—could probably design something better.

But no one does.

Instead, we have a universal standard.[1] Any fire truck, from any jurisdiction, can pull up to any hydrant and start moving water immediately. No adapters, negotiation, or guesswork.

That choice—to standardize the hookup rather than perfect the part—created a network effect that matters far more than small efficiency gains.

When a building is on fire, the best connector is the one that works everywhere.

MCP is that kind of decision.

What MCP Actually Is

MCP is not a new model or AI tool.

It’s like a fire hydrant: a standard fitting that lets any AI application connect to any data source or tool that supports the protocol.

It defines how AI-powered applications connect to the outside world.

At a technical level, MCP is an open protocol that lets AI hosts—things like chat apps, IDEs, or internal tools—connect to external servers that expose three kinds of capabilities:

Resources: data the AI can read

Tools: actions the AI can take

Prompts: reusable patterns for how work should be framed
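To make the three capability types concrete, here is a minimal sketch in plain Python. It is not the official MCP SDK and does no networking; it only models what a server exposes. Every name in it (the `Server` class, the `warehouse://` URI, `open_ticket`, `summarize_campaign`) is a hypothetical example.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Server:
    """Toy model of what an MCP-style server exposes (not the real SDK)."""
    # Resources: data the AI can read, keyed by URI.
    resources: dict[str, Callable[[], str]] = field(default_factory=dict)
    # Tools: actions the AI can take, keyed by name.
    tools: dict[str, Callable[..., str]] = field(default_factory=dict)
    # Prompts: reusable templates for how work should be framed.
    prompts: dict[str, str] = field(default_factory=dict)

    def read(self, uri: str) -> str:
        return self.resources[uri]()

    def call(self, name: str, **args: str) -> str:
        return self.tools[name](**args)

    def prompt(self, name: str, **args: str) -> str:
        return self.prompts[name].format(**args)


server = Server()
# Hypothetical resource: a curated, read-only campaign view.
server.resources["warehouse://views/campaign_performance"] = lambda: "spring_sale,clicks=1200"
# Hypothetical tool: opening a ticket is an action with side effects.
server.tools["open_ticket"] = lambda title: f"ticket opened: {title}"
# Hypothetical prompt: a standard way to frame a summary.
server.prompts["summarize_campaign"] = "Summarize {campaign} for an executive in three bullets."

print(server.read("warehouse://views/campaign_performance"))
print(server.call("open_ticket", title="Fix UTM tags"))
print(server.prompt("summarize_campaign", campaign="spring_sale"))
```

The point of the shape is that the host never needs to know how the campaign view is computed or which ticketing system sits behind `open_ticket`; it only needs to understand the three kinds of capability.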

That’s it. No magic or intelligence. It’s just a shared design pattern.

If APIs are the pipes that move data between systems, MCP is the hydrant fitting that lets AI connect to those pipes safely and predictably, regardless of who built the system on either side.

This is where a lot of early explanations go wrong. MCP is not “a better API.”

Your CRM, CDP, and warehouse still have APIs.

MCP standardizes how AI systems discover and use those APIs without each integration becoming a one-off project.[2]

Or, to borrow from the interview, MCP is “an evolution from an API,” not because it’s stricter, but because it’s looser in the right way.

As Owen put it:

“An API is very [rigid;] this endpoint must connect to this endpoint.

MCP is more like saying we can take data of any kind over this protocol and connect this application and that application.”

That “looseness” is the advantage for AI tooling.

Why Context Beats Cleverness

Owen offered another analogy that’s worth keeping in mind:

“LLMs are the world’s most forgetful journalist.”

A journalist without notes can still write. Sometimes even well. But the work gets better—more accurate, more grounded—when they’re given context, sources, and constraints.

Modern AI models are the same.

They are very good at working with what’s in front of them. They are much worse at guessing what should be in front of them.

MCP exists to solve that problem at a systems level.

Instead of stuffing more and more instructions into a prompt and hoping nothing important gets dropped, MCP gives AI a reliable way to ask:

What do I have access to?

What am I allowed to do?

How is this work usually done here?
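In the MCP specification, these three questions map roughly onto the `resources/list`, `tools/list`, and `prompts/list` requests, which travel as JSON-RPC 2.0 messages. The sketch below builds those requests and answers them with a toy in-memory handler. The catalog contents are hypothetical and simplified; this is an illustration of the discovery step, not a complete implementation of the spec.

```python
import json

# Hypothetical catalog a server might advertise (simplified shapes).
CATALOG = {
    "resources/list": {"resources": [
        {"uri": "warehouse://views/campaign_performance", "name": "Campaign performance"},
    ]},
    "tools/list": {"tools": [
        {"name": "open_ticket", "description": "Open a Jira ticket",
         "inputSchema": {"type": "object", "properties": {"title": {"type": "string"}}}},
    ]},
    "prompts/list": {"prompts": [
        {"name": "summarize_campaign", "description": "Executive campaign summary"},
    ]},
}


def request(req_id: int, method: str) -> str:
    """Build a JSON-RPC 2.0 request string."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "method": method})


def handle(raw: str) -> dict:
    """Answer a discovery request, or return JSON-RPC's 'method not found' error."""
    msg = json.loads(raw)
    if msg["method"] in CATALOG:
        return {"jsonrpc": "2.0", "id": msg["id"], "result": CATALOG[msg["method"]]}
    return {"jsonrpc": "2.0", "id": msg["id"],
            "error": {"code": -32601, "message": "Method not found"}}


# Access? Allowed actions? House conventions? One list request each.
for i, method in enumerate(["resources/list", "tools/list", "prompts/list"], start=1):
    reply = handle(request(i, method))
    print(method, "->", json.dumps(reply["result"]))
```

Because discovery is itself part of the protocol, an AI host can learn what a new server offers at runtime instead of having that knowledge hard-coded into a prompt.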

That’s not about raw intelligence. (You can’t just use more powerful math to solve every problem.)

It’s about orientation.

And reliable, predictable orientation is what turns a clever demo into a dependable AI tool.

Front End, Back End, Same Problem

In the interview, Owen described two main ways MCP-style connections show up:

“You’re seeing it in two ways, like a front end and a back end. And those two are all you need because they scale.”

On the front end, this looks like assistants that actually know your business. Not just your brand voice, but your customers, your history, your constraints.

An AI that can read previous campaign performance, check CRM notes, and draft a message that makes sense now, not in the abstract.

On the back end, it looks like what Owen half-jokingly called “bureaucracy.”

“You can build a team of bureaucrats to accomplish a larger goal. LLMs are wonderful at it if they have proper documentation.”

This is the unsexy work: reconciling data, writing summaries, updating systems, documenting decisions. Humans are bad at it. AI, given clear rails, is very good.

But only if the hookups are standard and the data is fundamentally solid.

What Production Actually Looks Like

Let’s make this concrete.

Imagine Snowflake sits at the center of your marketing data—not everything, but the things you trust. Curated views. Clean definitions. Data someone has already decided is safe to use.

An MCP server exposes those views as resources. Read-only at first. No surprises.

A small set of actions—run a scoped query, generate a summary, open a Jira ticket—are exposed as tools. Each one documented. Each one bounded. Some requiring confirmation before they run.

Prompts standardize how work starts. Every user request is preceded by a context prompt that sets out how you summarize campaigns, how you draft briefs, and how you explain results to executives.

Every interaction is logged back into the warehouse. Not for surveillance, but for learning. What did the AI touch? What did it suggest? What did humans accept, reject, or edit?
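The setup above boils down to a few rules: read only from curated views, bound every tool, require human confirmation for actions with side effects, frame every request the same way, and log everything. Here is a minimal sketch of that gatekeeping layer, assuming a hypothetical `Gateway` class. It does not talk to Snowflake or Jira; the view names, the row limit, the context prompt, and the in-memory log (standing in for a warehouse table) are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical curated views the AI may read (read-only).
ALLOWED_VIEWS = {"campaign_performance", "channel_spend"}
MAX_ROWS = 500  # Hypothetical bound on scoped queries.
CONTEXT_PROMPT = "You are a marketing analyst. Use only the curated views provided."


@dataclass
class Gateway:
    log: list[dict] = field(default_factory=list)  # Stand-in for a warehouse log table.

    def _record(self, action: str, detail: str, outcome: str) -> None:
        self.log.append({"at": datetime.now(timezone.utc).isoformat(),
                         "action": action, "detail": detail, "outcome": outcome})

    def scoped_query(self, view: str, limit: int) -> str:
        # Refuse anything outside the allowlist, then cap the row count.
        if view not in ALLOWED_VIEWS:
            self._record("query", view, "rejected: view not allowed")
            raise PermissionError(f"{view} is not a curated view")
        limit = min(limit, MAX_ROWS)
        # Interpolation is safe only because the view name passed the allowlist.
        sql = f"SELECT * FROM {view} LIMIT {limit}"
        self._record("query", sql, "ok")
        return sql  # A real system would execute this against a read-only role.

    def open_ticket(self, title: str, confirmed: bool) -> str:
        # Side-effecting tool: does nothing until a human confirms.
        if not confirmed:
            self._record("open_ticket", title, "pending confirmation")
            return "needs confirmation"
        self._record("open_ticket", title, "ok")
        return f"ticket opened: {title}"

    def frame(self, user_request: str) -> str:
        # Every user request starts from the same context prompt.
        return f"{CONTEXT_PROMPT}\n\nRequest: {user_request}"


gw = Gateway()
print(gw.frame("Summarize last week's campaigns"))
print(gw.scoped_query("campaign_performance", limit=10_000))
print(gw.open_ticket("Investigate CPC spike", confirmed=False))
print(gw.open_ticket("Investigate CPC spike", confirmed=True))
print(len(gw.log), "interactions logged")
```

Logging rejections and pending confirmations, not just successes, is what makes the "what did humans accept, reject, or edit?" question answerable later.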

This is not autonomous marketing.

It’s assisted work, done faster[3] and with fewer dropped threads, because the system knows where it is and why it exists.

As Owen put it plainly:

“You must give an agent the context of why they’re there and what they need to know.”

That’s the same skill set as managing human employees well.

Conclusion

The hydrant standard was not glamorous. It didn’t win awards for elegance. But it saved—and saves—cities, because it made coordination possible under stress.

MCP is the same idea.

It’s not about smarter models or clever prompts.

It’s about agreeing on the interface, even when that means giving up some local optimization. It’s about treating AI as part of an ecosystem, not a pile of demos.

Seen this way, MCP isn’t hype. It’s a sign that the industry is starting to think in systems again.

And systems thinking—slow, careful, often boring—is how technology stops being a toy and starts being infrastructure.

What’s Next

Now that MCP, which Anthropic introduced, has been adopted by almost all the major tech players, they’re moving on to another Anthropic proposal: Skills.

Skills are tiny standard operating procedures (SOPs) that explain to an AI tool how to do something.

If you had a “Turn on the Lights” Skill, it would tell the agent how to open your front door, where the light switch is, and how to flip it to “ON.”

As more companies adopt this structure, I’ll cover Skills in more detail in a future article.

Notes

[1] Almost entirely. There are a few historical or unusual types still in service, but a handful of common adapters covers them.

[2] APIs seem easy, but they’re enough of a pain in the rear that a buddy of mine, Charlie Alsmuller, runs an entire company that just sets up API handlers for e-commerce edge cases.

[3] Owen does call out a danger here, too.

AI makes it easy to produce more. More copy. More slides. More output. That can feel like progress, but isn’t.

Owen had a name for this:

“Work slop.”

Not AI slop. Work slop. Because the responsibility still sits with the human who ships it.

“A one-page handcrafted document that gets the point across is infinitely more valuable than 75 pages of AI-generated nothing.”

MCP doesn’t fix a lack of design thinking. In fact, it exposes it faster. Structure has a way of doing that.

But if you already care about clarity (about goals, constraints, and trust), then MCP helps turn it into a genuinely useful tool.