Spec-Driven Development: How AI Is Forcing Software Developers to Grow Up

It Turns Out Physical Engineering Tools Aren’t Outdated for Software

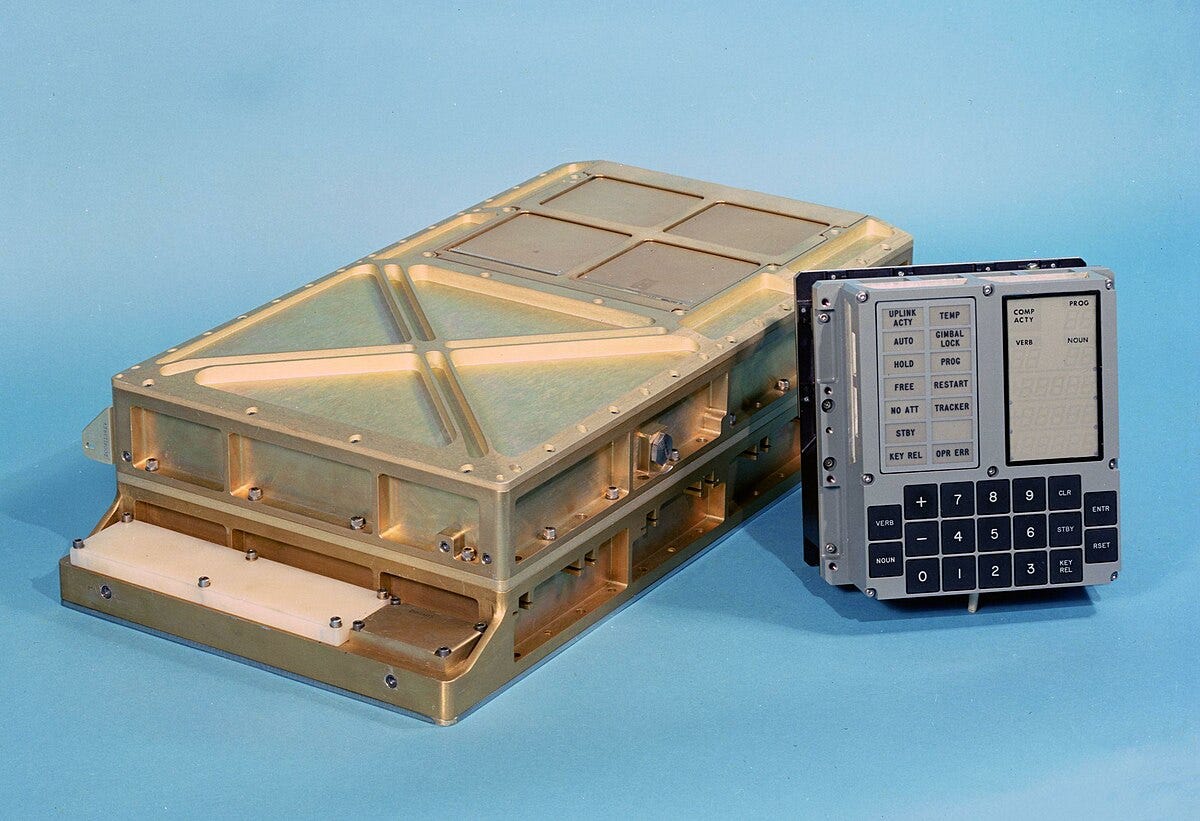

Somewhere between the first hatchets made from flint and the Apollo Guidance Computer, humanity discovered a simple truth: Precision is tough. It’s tougher without planning.

Before a part is milled, before a chip is soldered, before a car rolls off the line, someone has to decide what “right” looks like.

In physical (real) engineering, this decision takes the form of a specification (spec): a definition of the expected result, written before any production begins.

In physical production, you can’t patch a product once a customer has received it. It has to be right every time it leaves the factory.

For decades, software seemed exempt from this discipline (kind of, see below).

You could “just code.”

If something broke, you’d deal with it later.

If the product felt off, you’d pivot. The digital world’s beauty was that mistakes cost nothing but time[1]. The medium was infinitely forgiving.

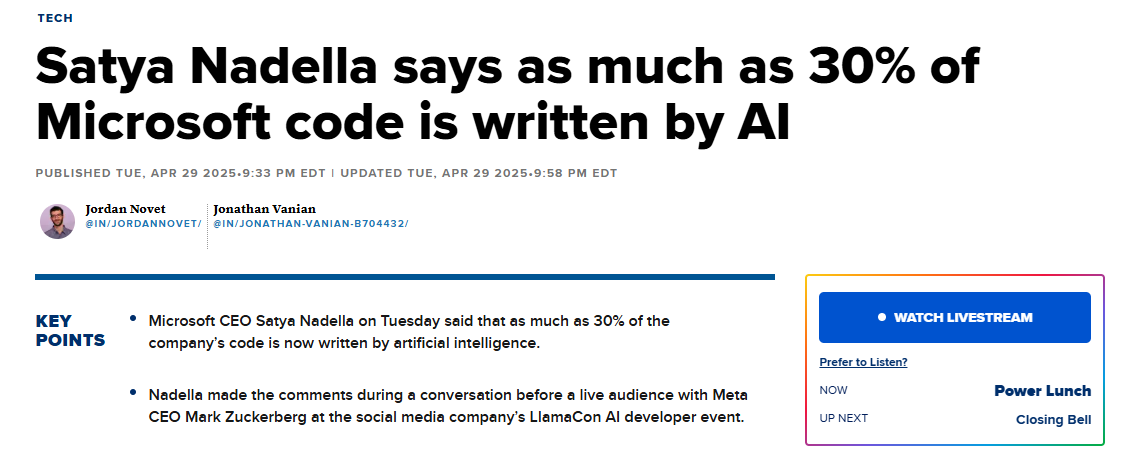

Then AI showed up and the amount of code produced started skyrocketing.

When Everyone Developed: The Great Vibe Coding Experiment

The first wave of AI hand-holding development, a.k.a. vibe coding, was intoxicating.

You could describe what you wanted, get back code that looked plausible, and (often) even see it run.

It felt like magic.

For prototypes, it was magic. Why spend a week messing with a new Python library you’ll never use again when a model could pull together a working demo in minutes?

For quick wins, marketing sites, or internal tools[2], this “describe and hope” approach works ‘well enough’.

But AI is creeping its wormy fingers into production systems—CRM extensions, data pipelines, and even Microsoft OS.

As its influence grows, cracks will appear. Code that “looks right” fails in quiet, unpredictable ways.

Seemingly small changes in phrasing or context produce completely different architectures.

Small changes to one function, prompt, or context reverberate across the entire codebase.

Every developer who’s tried to turn an AI-generated snippet into a real function knows the feeling: it’s like discovering your house only has “decorative” plumbing to plug into.

The problem isn’t that the AI tool is wrong.

It’s that we’re asking a computer poorly formed questions.

You Can’t Manage What You Don’t Understand

If this dynamic feels familiar to developers, it should.

Instead of receiving a vague, poorly thought-out request from a stakeholder, you’re now giving it to a hapless (but hardworking) junior AI engineer.

For decades, business managers believed that any project (marketing, logistics, or software) could be led through process and pressure. You didn’t need to understand how the thing worked; you just needed to ensure your teams hit their milestones. The result was a cancerous growth of status slides, red-amber-green (RAG) status updates, and meetings about meetings.

AI development is now replaying the same pattern. The idea that “anyone can code with AI” is the latest incarnation of “anyone can manage with dashboards.”

It assumes that visibility is the same as understanding, and that tools can replace the tacit knowledge professionals build over years of practice.

A less-experienced developer might see an AI-generated prototype and think, great, we’re halfway done.

An experienced developer sees the same thing and thinks, we haven’t started yet.

Without shared domain understanding, both believe they’re speaking the same language. But one sees only the stakeholders’ awe at a prototype, while the other knows that innumerable, ill-defined changes lie ahead.

The Return of the Blueprint

To solve this problem, AI companies are encouraging spec-driven development (SDD). SDD is the quiet[3] reintroduction of rigor to AI workflows.

In September, GitHub announced an open-source toolkit called Spec Kit, a framework designed to bring structure back into AI coding. It’s not flashy. It’s a disciplined, four-phase process:

Specify what you’re building and why. Write it down clearly.

Plan the technical architecture and constraints.

Task the work into discrete, verifiable units.

Implement with your coding agent of choice.

Each phase acts as a checkpoint: you don’t proceed until the previous one’s validated.
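The checkpoint idea can be sketched in a few lines. This is a minimal illustration, not Spec Kit's actual API: the `GatedWorkflow` class and its method names are hypothetical, and only the four phase names come from the process above.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Phase order taken from the four-phase process described above.
PHASES = ["specify", "plan", "tasks", "implement"]


@dataclass
class GatedWorkflow:
    """Hypothetical tracker: phases can only be validated in order."""
    validated: List[str] = field(default_factory=list)

    def next_phase(self) -> Optional[str]:
        """Return the phase awaiting validation, or None when all are done."""
        if len(self.validated) == len(PHASES):
            return None
        return PHASES[len(self.validated)]

    def validate(self, phase: str) -> None:
        """Mark a phase as validated; refuse to skip ahead."""
        expected = self.next_phase()
        if phase != expected:
            raise RuntimeError(
                f"cannot validate {phase!r}; next phase is {expected!r}"
            )
        self.validated.append(phase)


workflow = GatedWorkflow()
workflow.validate("specify")
workflow.validate("plan")

try:
    workflow.validate("implement")  # skips "tasks", so the gate refuses
except RuntimeError as err:
    skipped_error = str(err)
```

The point of the sketch is the refusal: jumping straight to implementation raises an error instead of quietly succeeding.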

The result is less high-speed magic, and more reliable manufacturing.

What GitHub is really doing here is rediscovering that the blueprint matters more than raw output.

In their framing, the specification becomes executable. AI can generate, refine, and enforce specs.

It’s the same principle that guided NASA’s flight software teams in the 1960s: intent must be fully defined before implementation. You don’t build the rocket and hope the math checks out.

You define success, build tests across all relevant dimensions, and only then create the code.
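As a rough sketch of that ordering, the example below writes down what "right" means as checkable cases first, and only then supplies an implementation. The shipping-fee rules, `SHIPPING_SPEC`, and both function names are invented for illustration and are not taken from any real project or tool.

```python
# The spec: agreed-upon examples of "right", written before any implementation.
# Each entry is (order_total, is_member, expected_shipping_fee).
SHIPPING_SPEC = [
    (20.00, False, 5.00),  # small order, non-member pays the flat fee
    (50.00, False, 0.00),  # orders of 50 or more ship free
    (20.00, True, 0.00),   # members always ship free
]


def check_against_spec(candidate, spec):
    """Return the spec cases that the candidate implementation fails."""
    failures = []
    for total, is_member, expected in spec:
        actual = candidate(total, is_member)
        if actual != expected:
            failures.append((total, is_member, expected, actual))
    return failures


# Only now is an implementation written (by a person or a coding agent).
def shipping_fee(order_total, is_member):
    if is_member or order_total >= 50.00:
        return 0.00
    return 5.00


failures = check_against_spec(shipping_fee, SHIPPING_SPEC)
```

An empty `failures` list means the implementation meets the spec as written. Anything else is a concrete, reviewable gap rather than a feeling that the code "looks right".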

Old Lessons, New Medium

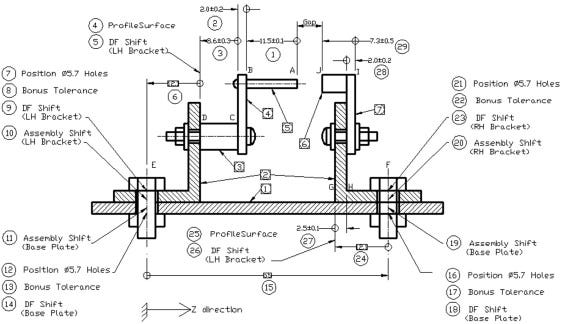

This isn’t new. The manufacturing world has lived by these rules for about a century.

A machinist doesn’t “vibe” a titanium bracket into existence for a plane.

They can’t even start the machine before they have a clear idea of where to go.

They follow a drawing that defines every tolerance and material property.

If the result deviates by more than a (specified) tolerance, an inspection flag is raised.

Statistical process control systems monitor the line, not to punish outliers, but to understand and predict the process, tracing cause and effect.
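To make the difference between an inspection flag and a control chart concrete, here is a simplified sketch. The nominal dimension, tolerance, and measurements are made-up numbers, and the limits use a plain mean plus or minus three standard deviations, which is a simplification of what production SPC software does.

```python
from statistics import mean, stdev

NOMINAL_MM = 25.00   # hypothetical target dimension from the drawing
TOLERANCE_MM = 0.05  # hypothetical allowed deviation either side


def out_of_tolerance(measured_mm):
    """True when one part deviates from nominal by more than the tolerance."""
    return abs(measured_mm - NOMINAL_MM) > TOLERANCE_MM


def control_limits(baseline):
    """Lower and upper limits: baseline mean +/- 3 sample standard deviations."""
    center = mean(baseline)
    spread = stdev(baseline)
    return center - 3 * spread, center + 3 * spread


# Measurements taken while the process was known to be healthy (invented data).
baseline = [25.01, 24.99, 25.00, 25.02, 24.98, 25.00, 25.01, 24.99]
lower, upper = control_limits(baseline)

new_parts = [25.00, 25.01, 25.04, 25.07]
flagged = [m for m in new_parts if out_of_tolerance(m)]          # rejected parts
drifting = [m for m in new_parts if not lower <= m <= upper]     # process signals
```

With these numbers, the 25.04 part still passes inspection but falls outside the control limits. That is the "understand and predict" part: the chart signals drift before the line starts producing rejects.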

Spec-driven development applies the same logic to software. The “spec” defines intent; the AI enforces consistency.

The developer becomes less of a typist and more of a process engineer, adjusting parameters, reviewing outputs, and maintaining alignment between what was promised and what’s built.

It’s an ironic shift after decades of mockery from software engineers: the rigor of physical manufacturing is now applied to the incredible flexibility of code.

And it’s possible only because AI can now handle the huge amount of effort that made doing so impractical before.

The Manager’s Blind Spot

For marketing technologists and digital leaders, this shift isn’t just about tools. It’s about domain literacy.

Managing a team of AI-assisted developers without understanding specs is like running a factory floor without reading the control charts.

You can see the output and you can see the rejects, but you can’t tell if the process is healthy.

A leader who understands spec-driven development will ask different questions. Instead of “is it done yet?” they’ll ask “has the spec been validated with stakeholders?” Instead of “can we launch next week?” they’ll ask “are we confident this aligns with the enterprise constraints?”

These aren’t just semantic differences—they’re cultural ones.

They signal respect for how things actually get made, without giving developers a blank check and blind trust.

The irony is that this literacy doesn’t require learning to code.

It requires learning to think like an engineer—to see complexity not as chaos, but as something that can be molded, constrained, measured, and broken to your will.

Conclusion: How I Stopped Worrying And Learned To Love The Hum of the Machine

When an assembly line is tuned correctly, it hums happily.

Every station, every process, every measurement aligns toward a single output.

Spec-driven development is software’s way of finding that hum again.

It’s a reminder that sophistication isn’t the opposite of simplicity—it’s what happens when you refine your chaos into order. The managers who understand that—who learn to get their systems into tune—will be the ones who thrive in the AI era.

Because as it turns out, you still need domain knowledge to build enterprise software.

AI didn’t make engineering obsolete.

It just made good software engineering start following the rules that normal engineers already have.[4]

[1] Obviously not really, but at a smaller scale.

[2] Things where pretty matters more than functional.

[3] Not admitting to investors that the code produced can be substandard, but rather introducing a new way to maintain the inevitable mistakes that are introduced.

[4] I’m not a regulatory-minded guy, but one can easily imagine that the sheer output of code from AI development tools in the next few decades will be astronomical.

Without a human eye on each line, relying on more ‘traditional’ bulk examination processes will mean that we start thinking of development as less deterministic and more probabilistic.

In the same way we have ‘approved’ parts for aerospace metals, we’ll likely start to have ‘approved’ spec formats and structures for high-impact enterprise coding.

You can’t examine every atom in a bar of iron being smelted. Before AI, a reviewer could examine the code equivalent of each atom. Now that we’re moving towards whole ‘bulk’ code chunks, we’re going to need the same examination practices in software dev.