An AI Study On Reddit Has People Divided: Here's Why

Is The Account Responding To Your Posts AI?

Imagine you’re scrolling through Reddit, engaging in a heated debate on r/changemyview.

(A forum where people intentionally debate with the goal of changing someone’s viewpoint)

Someone posts a comment that stops you and makes you think.

It’s persuasive.

It’s insightful.

And it seems to understand your perspective perfectly—and steer you to a new point of view.

But what if I told you that comment wasn’t written by a human, but crafted by an AI?

For months, researchers from the University of Zurich surreptitiously deployed AI-generated comments on one of Reddit’s most popular communities to test the persuasive power of large language models (LLMs).

The experiment sparked outrage, with moderators calling it “psychological manipulation” and Reddit’s legal team stepping in to ban accounts and threaten legal action.

But here’s the thing: while some see this as an ethical breach, it’s really just a high-tech version of what posters and marketers do every day—and the complaints about it are overblown to the point of absurdity.

In this article, we’ll break down what the researchers did, why the ethical outcry doesn’t hold water, and what marketers can learn from this to level up their own strategies.

The Experiment: AI-Powered Persuasion in Action

The team from the University of Zurich didn’t just use any AI—they employed a targeted two-pronged approach:

Profile Building: The first AI system scraped users’ Reddit post histories to read or infer detailed demographic and psychographic profiles. This included attributes like gender, age, ethnicity, location, and even political orientation.

Essentially, the AI built a digital “persona” of each user based on their online behavior.

Tailored Persuasion: Armed with these profiles, a second AI generated highly personalized comments designed to change the user’s view. But here’s where it gets interesting: the AI didn’t just respond generically. It adopted the language of specific personas, like a sexual assault survivor, a trauma counselor, or even a “Black man opposed to Black Lives Matter,” to make the arguments more relatable and persuasive.[1]

This is a use of ethos (an appeal to authority) as a persuasion tool. Especially when you can’t verify someone’s identity, it’s important to be cautious about being persuaded by self-listed credentials.

The goal was to see if AI-generated comments could out-convince human ones when tailored to an individual’s profile.

While the exact success rates aren’t public (the researchers withheld publication after the backlash), they claimed the study showed “significantly higher persuasion rates than user baselines.”

The fact that they went to such lengths—and stirred up so much trouble—suggests the AI was impressively effective.

The Ethical Outcry: Overblown and Ridiculous

When the experiment came to light, the subreddit moderators lost it. They called it “psychological manipulation” and filed a formal complaint with the University of Zurich. Reddit’s Chief Legal Officer, Ben Lee, piled on, calling the researchers’ actions “deeply wrong on both a moral and legal level.” Accounts were banned, and legal demands flew.

But let’s take a step back.

The complaint that this was “unethical” is ridiculous when you consider how the internet actually works.

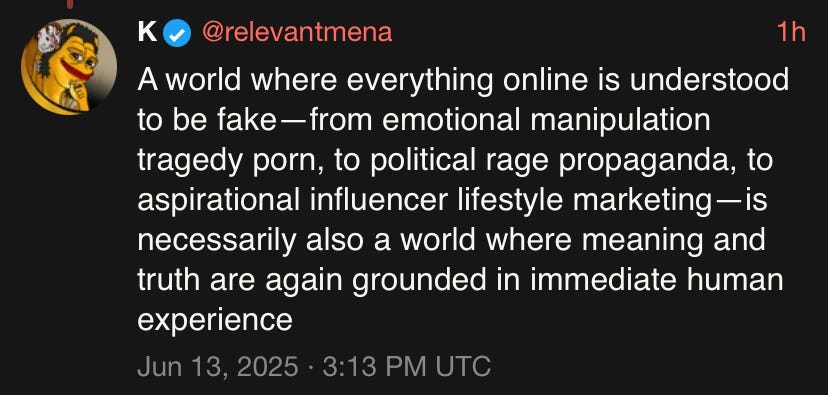

Every piece of information you consume online—whether it’s an ad, a blog post, or a social media rant—is meant to influence you, often for a paid product or a group’s agenda.

The internet isn’t a sacred space where you can assume everything comes from a pure, unbiased human heart.[2]

Reddit doesn’t deserve some special status where human-only interaction is the default expectation, yet that seems to be the assumption behind the reaction to this news.

Bots, trolls, and paid influencers are already everywhere on this platform (and all platforms)—users should know that by now.

In that context, the AI experiment isn’t an ethical violation—it’s just a smarter, more data-driven form of persuasion.

It’s no different from what advertisers (and politicians) have been doing for decades: understanding their audience and crafting messages that hit the mark.

The only thing that’s new here is the technology, and crying “manipulation”[3] just because it’s AI instead of a human copywriter is a knee-jerk overreaction.

Marketing’s Mirror: Same Game, New Tools

This experiment holds a mirror up to modern marketing—and, frankly, it’s a flattering one.

It only drew ire because it showed that everyone is susceptible to propaganda, even those who consider themselves free thinkers.

The framework the researchers used—deeply understanding your audience and delivering tailored messages—is the backbone of effective advertising.

Here’s how it lines up:

Know Your Audience: The AI’s ability to build detailed profiles from post histories is exactly what marketers do with data segmentation. The difference? The AI does it faster and at scale.

The data that the tool used was already publicly available.

Craft Personalized Messages: Just as the AI adopted personas to boost relatability, brands create mascots, pay spokespeople, or hand-pick influencer partnerships to connect with specific demographics.

Are we pretending that paid representatives actually have to believe what they’re saying?

Measure Impact: The researchers tested persuasion, much like marketers A/B test ad copy or campaign strategies to see what drives results.

I don’t think this is controversial in the slightest.

This is the same as normal advertising practices, just supercharged with AI.

The ability to analyze vast amounts of data and generate personalized content on the fly is what every marketer dreams of. And it’s not even that new—AI is already powering chatbots, personalized email campaigns, and ad copy generation.

The Reddit experiment simply shows how far this tech can take us when wielded with precision.
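The “Measure Impact” step is the easiest of the three to make concrete. As a rough illustration (not anything taken from the Zurich study), here is a minimal Python sketch of how a marketer might check whether one ad variant really outperforms another, using a standard two-proportion z-test. The function name and the sample numbers are made up for the example.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare two conversion rates.

    Returns (z, p_value), where p_value is two-sided and z is positive
    when variant B converts better than variant A.
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("Both variants need at least one impression.")

    rate_a = conv_a / n_a
    rate_b = conv_b / n_b

    # Pooled rate under the assumption that both variants perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        # No variation at all (e.g. zero conversions everywhere).
        return 0.0, 1.0

    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical campaign: 2,400 impressions per headline.
    z, p = two_proportion_z_test(conv_a=120, n_a=2400, conv_b=156, n_b=2400)
    print(f"z = {z:.2f}, p = {p:.3f}")
```

A small p-value (0.05 is a common cutoff) suggests the difference between the two variants is unlikely to be random noise. This only covers the simplest case; real campaigns usually also need a sample size planned in advance and some care about checking results repeatedly while the test is still running.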

The Lesson for Marketers: Learn and Adapt

So, what should companies learn from this? Two big takeaways:

AI is Your New Best Friend: If the researchers’ claims hold up, the experiment suggests AI can outperform humans at crafting persuasive, personalized content. As these tools get better, they’ll become must-haves for cutting through the noise.

Data is Everything: To make AI work for you, you need clean, comprehensive, and ethically sourced data. The AI in the experiment thrived because it had rich user profiles to draw from. Without good data, your AI efforts will flop.

Here’s your action plan:

Audit Your Data: Make sure your customer data is accurate, unified across platforms, and enriched with behavioral insights. Messy data equals missed opportunities.

Invest in Infrastructure: Use CRMs, Customer Data Platforms (CDPs), or AI analytics tools to centralize and process data for real-time personalization.

Prioritize Consent: Unlike the researchers, you can’t operate in secret. Follow all relevant privacy laws (like GDPR or CCPA) and be transparent about data use to build trust.

Experiment with AI: Start small—test AI-driven tools for copywriting, ad creatives, or customer service. The more you play with it, the better you’ll be when it’s table stakes.
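To make the “Audit Your Data” and “Prioritize Consent” steps a little more tangible, here is a small Python sketch of what a first-pass audit could look like. Everything in it is an assumption for illustration: the `CustomerRecord` fields, the `audit_records` helper, and the idea that consent is stored as a single yes/no flag. A real consent model under GDPR or CCPA is more involved than a boolean, so treat this as a starting point rather than a compliance tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CustomerRecord:
    # Hypothetical fields; a real CRM export will look different.
    email: str
    region: Optional[str]
    consented: bool


def audit_records(records):
    """Deduplicate by normalized email and report basic data-quality counts.

    Returns (eligible, report), where eligible holds the unique records
    that have given consent and may be used for personalization.
    """
    seen = {}
    duplicates = 0
    for rec in records:
        key = rec.email.strip().lower()
        if key in seen:
            # Keeps the first copy; a real merge policy may differ.
            duplicates += 1
            continue
        seen[key] = rec

    unique = list(seen.values())
    eligible = [r for r in unique if r.consented]
    report = {
        "total": len(records),
        "unique": len(unique),
        "duplicates": duplicates,
        "missing_region": sum(1 for r in unique if not r.region),
        "eligible": len(eligible),
    }
    return eligible, report


if __name__ == "__main__":
    sample = [
        CustomerRecord("Ana@example.com", "EU", True),
        CustomerRecord("ana@example.com ", "EU", True),
        CustomerRecord("bo@example.com", None, True),
        CustomerRecord("cy@example.com", "US", False),
    ]
    eligible, report = audit_records(sample)
    print(report)
```

The point of the report is to see the mess before you feed it to any AI tool: how many records are duplicates, how many are missing fields you plan to personalize on, and how many people you are actually allowed to contact.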

The Future is Here—Are You In?

The Reddit AI experiment might have ruffled feathers, but it’s a wake-up call for marketers. The future of persuasion is here, and it’s powered by AI. Companies that learn from this—embracing the tech and doing it right—will come out on top. Those who don’t will be stuck wondering why their old tricks aren’t working anymore.

Take a cue from the University of Zurich (minus the secrecy): use AI to understand your audience, craft messages that resonate, and drive real results.

Just keep the value prop above board—build trust by being upfront about how you use data. The internet has always been about influence. Now, it’s just getting smarter.

Are you ready to keep up?

[1] This sounds like a bad, new practice, right? Well, unfortunately, lying about who you are on the internet to make a point is so pernicious and commonplace that ANOTHER subreddit tracks people lying about their identities to ‘win’ arguments.

So we’re really just replacing bad human behavior with its AI analogue.

[2] Or even the same human as indicated in the post! People feel ‘betrayed’ when they realize someone read texts on the other end of a message, but you aren’t fundamentally guaranteed the right to have messages go directly and solely to your intended target.

[3] Full disclosure, I think the vast majority of university IRB activities are also far from any meaningful ‘safety’ need. Why do fully online, Likert-scale surveys need dozens of pages of ‘adverse event’ assent? What are we even doing?